Alfred Alias Hack 08 Feb 2024

I live by Alfred as a launcher and all-around insta-utility thing. I also live by whatever terminal I am currently using. Switching from Terminal.app to iTerm.app was easy enough in Alfred: I just had to hit the down arrow the first 2-3 times I tried to launch it, and Alfred picked up that ⌘-spc t e r means iTerm.app, not Terminal.app, and we're off to the races. This works because iTerm nicely has t e r in its name, so Alfred matches it as I type t e r.

Ghostty.app does not include t e r so I have been launching iTerm, quitting iTerm, launching Ghostty, on repeat, all the time. So, the hack to convince Alfred that ⌘-spc t e r means "launch ghostty" (as I have given up on reprogramming my fingers) is as follows:

Create an app alias (in the Finder, right click on Ghostty.app -> Make Alias) and name it "Ter Ghostty.app". This makes a special macOS alias file, not a symlink. Fire up Alfred Preferences and tell it to also search com.apple.alias-file files. This is buried in Default Results -> Advanced. You can drag and drop the newly created alias file into the list to do it, or type carefully.

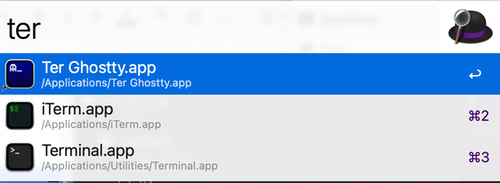

At that point, Alfred will see the alias and start showing it in the default results, and after a few iterations it will pick up on the fact that ⌘-spc t e r means Ter Ghostty.app, not iTerm.app or anything else.

This is also useful for any other case where you want to change a default your fingers have memorized (Chrome -> Safari, Vim -> Emacs, Notes -> Obsidian, and maybe someday, Emacs -> Zed).

Ghostty, emacsclient, and terminfo 04 Feb 2024

I've been lucky enough to get into the Ghostty beta, and am very happy with it so far. I ran into one real hiccup, and I know I'll forget how I fixed it when I set up my next laptop, so I'm leaving a note for myself (and anyone else who hits it).

Ghostty uses a custom terminfo, xterm-ghostty, and does not install it to the system or user, but specifies a TERMINFO=/Applications/Ghostty.app/Contents/Resources/terminfo environment variable in the process for Ghostty itself. This mostly works great, but not in cases where something started outside Ghostty cares about the terminal capabilities -- such as an emacs daemon :-(
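To make the failure mode concrete, here is a minimal sketch of roughly how a terminfo lookup proceeds, assuming the usual ncurses search order (TERMINFO, then ~/.terminfo, then TERMINFO_DIRS, then a system directory). The function name `find_terminfo` and the `system_dirs` parameter are made up for illustration, and real ncurses has more corner cases than this.

```python
from pathlib import Path
from typing import Mapping, Optional, Sequence


def find_terminfo(
    term: str,
    env: Mapping[str, str],
    system_dirs: Sequence[str] = ("/usr/share/terminfo",),
) -> Optional[Path]:
    """Return the first compiled terminfo entry found for `term`, or None.

    Simplified model of the ncurses search order; `system_dirs` stands in
    for the compiled-in defaults and is an assumption of this sketch.
    """
    bases = []
    if env.get("TERMINFO"):
        bases.append(Path(env["TERMINFO"]))
    if env.get("HOME"):
        bases.append(Path(env["HOME"]) / ".terminfo")
    bases += [Path(d) for d in env.get("TERMINFO_DIRS", "").split(":") if d]
    bases += [Path(d) for d in system_dirs]

    # Entries live under a one-letter directory ("x/") or, as on macOS,
    # under the hex code of the first character ("78/" for "x").
    subdirs = (term[0], format(ord(term[0]), "x"))
    for base in bases:
        for sub in subdirs:
            entry = base / sub / term
            if entry.is_file():
                return entry
    return None
```

Called with the environment of a shell inside Ghostty, this would be expected to find the entry through TERMINFO. Called with the environment of a daemon started by launchd, which never had TERMINFO set, it has nowhere to find xterm-ghostty unless the entry is installed somewhere else on the search path.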

When I started, I was using an emacs daemon controlled by homebrew services, as I more or less forget everything about launchd shortly after doing anything with it. Homebrew is clever and replaces the launchd plist every time you start the service, so you cannot edit it, boo. I get it, keeps people from breaking things, but boo. So, Step 1: stop using homebrew services to interface to launchd to start my emacs daemon.

Step 2: add my own launchd plist thingie at ~/Library/LaunchAgents/ghostty.emacs.plist. It is derived from the one homebrew uses, which is derived from the venerable mxcl version:

    <?xml version="1.0" encoding="UTF-8"?>
    <!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "http://www.apple.com/DTDs/PropertyList-1.0.dtd">
    <plist version="1.0">
    <dict>
        <key>EnvironmentVariables</key>
        <dict>
            <key>TERMINFO</key>
            <string>/Applications/Ghostty.app/Contents/Resources/terminfo/</string>
        </dict>
        <key>KeepAlive</key>
        <true/>
        <key>Label</key>
        <string>ghostty.emacs</string>
        <key>LimitLoadToSessionType</key>
        <array>
            <string>Aqua</string>
            <string>Background</string>
            <string>LoginWindow</string>
            <string>StandardIO</string>
            <string>System</string>
        </array>
        <key>ProgramArguments</key>
        <array>
            <string>/opt/homebrew/opt/emacs/bin/emacs</string>
            <string>--fg-daemon</string>
        </array>
        <key>RunAtLoad</key>
        <true/>
    </dict>
    </plist>

I changed the label and added the EnvironmentVariables section setting TERMINFO. I stopped the brew service for emacs, started this one with launchctl load -w ~/Library/LaunchAgents/ghostty.emacs.plist, and voila, emacsclient works again :-)
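If hand-editing XML is not your thing, the same two changes (new label, added TERMINFO) can be sketched with Python's standard plistlib. This is only an illustration, not how I actually did it: the function name `derive_agent` is made up, and it works on bytes so you can feed it whatever plist you start from.

```python
import plistlib

# Path taken from the TERMINFO value Ghostty sets for itself.
GHOSTTY_TERMINFO = "/Applications/Ghostty.app/Contents/Resources/terminfo/"


def derive_agent(source_plist: bytes, label: str = "ghostty.emacs") -> bytes:
    """Return a copy of a launchd plist with a new Label and TERMINFO set.

    Hypothetical helper: it only touches Label and EnvironmentVariables
    and leaves every other key as it found it.
    """
    agent = plistlib.loads(source_plist)
    agent["Label"] = label
    env = dict(agent.get("EnvironmentVariables", {}))
    env["TERMINFO"] = GHOSTTY_TERMINFO
    agent["EnvironmentVariables"] = env
    return plistlib.dumps(agent)  # XML format, keys sorted
```

Read the plist homebrew generated, pass its bytes through `derive_agent`, and write the result to ~/Library/LaunchAgents/ghostty.emacs.plist before loading it with launchctl.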

This feels like a somewhat hacky fix, and I think the better way is probably to install the terminfo file for the local user, the way kitty does -- but I want to grok why Mitchell is not doing that before I muck with that!

Epithet, Briefly 30 Nov 2023

Since Bob asked, I'm cleaning up a system to make using short-lived ssh certificates easy, simple, and secure.

The gist is to use a custom ssh-agent which, in turn, uses some modern authn service (OIDC, Keycloak, Okta, Google Sign-In, etc) to authenticate you. It then passes that auth token up to a CA, which relies on a policy service to verify the auth token and respond with cert params (time, principals, extensions, whatever is needed). The CA uses the policy-provided attributes to generate a short-lived cert, which it gives back to the agent. Voila, you can access things for a couple minutes.

There is a real sequence diagram of what is going on in the repo.

CA

The CA is dirt simple and is the only thing that will ever see the CA private key. The same CA service should be usable by more or less anyone, maybe with some variety in terms of where the private key is stored, but that is it.

The CA receives an opaque auth token + pubkey from the agent and responds with a cert. The existing implementation uses a policy service to decide whether to issue the cert, and what attributes to give it.

The actual CA was kept as simple as possible, because it is a CA. You don't want to muck with it much.
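To give a feel for how little the CA has to do, here is a sketch that maps a set of policy attributes onto an `ssh-keygen` signing command. This is not how the real CA is implemented; it is a stand-in using the stock OpenSSH tool, and the function name, the file paths, and the shape of the `policy` dict are assumptions for illustration.

```python
from typing import Any, Dict, List


def ssh_keygen_args(policy: Dict[str, Any], ca_key_path: str, pubkey_path: str) -> List[str]:
    """Build an ssh-keygen command that signs `pubkey_path` per `policy`.

    Illustrative only: `policy` is assumed to carry identity, principals,
    expiration (e.g. "2m"), and a dict of boolean extensions.
    """
    args = [
        "ssh-keygen",
        "-s", ca_key_path,                      # CA private key used to sign
        "-I", policy["identity"],               # key identity, shows up in sshd logs
        "-n", ",".join(policy["principals"]),   # principals the cert is valid for
        "-V", "+" + policy["expiration"],       # validity: from now until now + expiration
        "-O", "clear",                          # drop the default permissions first
    ]
    # Re-enable only the extensions the policy granted.
    for name, enabled in sorted(policy.get("extensions", {}).items()):
        if enabled:
            args += ["-O", name]
    args.append(pubkey_path)
    return args
```

Passing the resulting list to `subprocess.run` would write the certificate next to the public key. The point of the sketch is just that every interesting decision arrives from the policy service and the CA only signs.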

Policy

The policy service consumes the opaque auth tokens from the CA and responds with basic info, assuming the auth is valid:

    {
      "identity": "brianm@skife.org",
      "principals": ["oncall_fluffy", "oncall_wibble", "wheel"],
      "expiration": "2m",
      "extensions": {
        "permit-pty": true,
        "permit-port-forwarding": true
      }
    }

The policy server (single endpoint, takes a POST with the context for the request) is nice as it can be dynamic and make use of risk models and various other signals to decide what to do: e.g., "is it a deploy server asking for a key? Is there actually a deploy in progress matching the identity requesting the cert? Okay, give it a 5 minute cert" on the sophisticated side; simply checking an OIDC token for validity on the simpler side.
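As a rough sketch of that range, here is what the decision logic might look like once the token has already been verified. Everything here is hypothetical: the `claims` fields (`email`, `groups`, `role`), the `active_deploys` set, and the `deploy` principal are invented for illustration and are not the real service's API.

```python
from typing import Any, Dict, FrozenSet, Optional


def decide(
    claims: Optional[Dict[str, Any]],
    active_deploys: FrozenSet[str] = frozenset(),
) -> Optional[Dict[str, Any]]:
    """Return cert attributes for verified `claims`, or None to refuse.

    `claims` is assumed to be the output of some token verifier
    (None when the token was invalid).
    """
    if claims is None:
        return None  # auth failed, no cert

    identity = claims["email"]

    # Sophisticated side: a deploy server only gets a cert while a deploy
    # matching its identity is actually in progress.
    if claims.get("role") == "deploy":
        if identity not in active_deploys:
            return None
        return {
            "identity": identity,
            "principals": ["deploy"],
            "expiration": "5m",
            "extensions": {},
        }

    # Simple side: a valid token is enough for a short-lived interactive cert.
    return {
        "identity": identity,
        "principals": sorted(claims.get("groups", [])),
        "expiration": "2m",
        "extensions": {"permit-pty": True, "permit-port-forwarding": True},
    }
```

The returned dict has the same shape as the JSON response above, so the CA never needs to know which of these branches produced it.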

Agent

The ssh agent generates a new keypair on startup and never lets the private key leave memory. It has a small gRPC API over a domain socket for interfacing with authentication mechanisms, such as a helper to simply feed it tokens over stdin for simple cases. We popped a browser (or used a cli tool) to do the Okta dance.

Michael and I made this at a previous company. We spun up the CA in one lambda function, the policy server in another, and authed against Okta. I want it for my own stuff now and it doesn't look like anyone there is maintaining it anymore, so I have been bringing it back to life.