I'm replacing my general/util server at home and want to manage VMs on the new host with vm-bhyve. Setting it up is well documented, but running Linux VMs still steers folks towards GRUB, which is not really great. These are just my notes (for later, after I forget) on using cloud images and UEFI. This is a supplement to the docs, not a replacement!

I created a template, creatively named brian-linux.conf:

```
loader="uefi"
cpu=2
memory=4G
network0_type="virtio-net"
network0_switch="public"
disk0_type="nvme"
disk0_dev="sparse-zvol"
disk0_name="disk0"
```

I'm running on ZFS, so I can use sparse-zvol for storage. Disk space is then only soft-allocated, which lets me overcommit.
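To keep an eye on how far that overcommit goes, something like the following sums the nominal size of every zvol against the space it actually uses. It is a sketch, not part of vm-bhyve: the function name `zvol_overcommit` is made up, it assumes the only zvols on the host are VM disks, and it reads the tab-separated, exact-byte output of `zfs list -Hp`.

```shell
# Sum nominal zvol size vs. space actually consumed, reported in GiB.
# Input: tab-separated "name<TAB>volsize<TAB>used" lines in bytes, i.e. the
# output of: zfs list -Hp -t volume -o name,volsize,used
zvol_overcommit() {
  awk -F'\t' '
    { nominal += $2; used += $3 }
    END { printf "nominal=%.1fG used=%.1fG\n", nominal / 1073741824, used / 1073741824 }'
}
```

Usage: `zfs list -Hp -t volume -o name,volsize,used | zvol_overcommit`. A large gap between the two numbers is exactly the soft allocation at work.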

Now, the commands to make things happy and run some cloud images:

```shell
# Additional dependencies for vm-bhyve
pkg install cdrkit-genisoimage # to use cloud-init
pkg install qemu-tools         # to use cloud images
pkg install bhyve-firmware     # to use uefi

# Download some cloud images to use for our VMs
vm img https://dl-cdn.alpinelinux.org/alpine/v3.19/releases/cloud/nocloud_alpine-3.19.1-x86_64-uefi-cloudinit-r0.qcow2
vm img http://cloud-images.ubuntu.com/noble/current/noble-server-cloudimg-amd64.img

# Create a VM. A comment can't follow a line-continuation backslash,
# so the flags are annotated here instead:
#   -t brian-linux                      our template, from above
#   -i noble-server-cloudimg-amd64.img  the cloud image
#   -s 50G                              disk size override
#   -c 4                                cpu count override
#   -m 32G                              memory override
#   -C -k ~brianm/.ssh/id_rsa.pub       cloud-init, with this ssh pubkey
#   v0001                               vm name
vm create \
  -t brian-linux \
  -i noble-server-cloudimg-amd64.img \
  -s 50G \
  -c 4 \
  -m 32G \
  -C -k ~brianm/.ssh/id_rsa.pub \
  v0001
```
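From there, the rest is ordinary vm-bhyve usage. A sketch of the follow-up commands, using the `v0001` name from above; `start`, `list`, and `console` are standard vm-bhyve subcommands:

```shell
vm start v0001    # boot it; cloud-init does its work on this first boot
vm list           # confirm the VM shows as running
vm console v0001  # attach to its console (tmux, given console=tmux)
```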

The docs cover these, but as a reminder to myself, here's how I configured things beyond the defaults:

```shell
vm switch vlan public 4 # use vlan 4 for VMs
vm set console=tmux     # tmux for console access
```

A nice side effect of using cloud-init is that it also logs the DHCP-assigned IP address, so I can fire up the console and search for it (C-b C-[ C-s Address)! To be honest, I should probably write a script to extract it.
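A first pass at such a script might look like this. It is a sketch under assumptions: the function name `ci_ipv4` is made up, it assumes cloud-init printed its usual `ci-info` "Net device info" table (rows of `| Device | Up | Address | Mask | Scope | Hw-Address |`) to the console, and it assumes the console scrollback can be dumped as text, for example with tmux's `capture-pane`. The table layout is worth checking against a real boot log.

```shell
# Print the IPv4 address(es) cloud-init reported for interfaces that are up.
# Assumes "Net device info" rows shaped like:
#   ci-info: | enp0s5 | True | 192.168.4.23 | 255.255.255.0 | global | 58:9c:fc:00:00:01 |
# Rows from other ci-info tables (e.g. routes) are skipped because their
# "Up" position does not hold True. Loopback and duplicates are dropped.
ci_ipv4() {
  awk -F'|' '
    /ci-info: *[|]/ {
      up = $3;   gsub(/ /, "", up)    # "Up" column
      addr = $4; gsub(/ /, "", addr)  # "Address" column
      if (up == "True" && addr ~ /^[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+$/ && addr !~ /^127\./ && !seen[addr]++)
        print addr
    }'
}
```

Usage: `tmux capture-pane -p -J -S - -t TARGET | ci_ipv4`, where `TARGET` is a placeholder for the pane holding the VM console (`tmux ls` shows what vm-bhyve created). `-S -` starts at the beginning of the scrollback and `-J` rejoins wrapped lines so the table rows stay intact.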

I live by Alfred as a launcher and all-around insta-utility thing. I also live by whatever terminal I am currently using. Switching from Terminal.app to iTerm.app was easy enough in Alfred: I just needed to hit the down arrow after trying to launch it 2-3 times, and it picked up that ⌘-spc t e r means iTerm.app, not Terminal.app, and we're off to the races. This works because iTerm nicely has t e r in it, so Alfred picks it up as I type t e r.

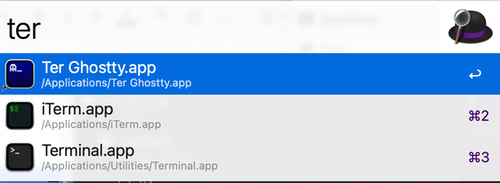

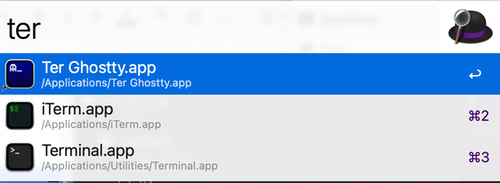

Ghostty.app does not include t e r, so I have been launching iTerm, quitting iTerm, launching Ghostty, on repeat, all the time. So, the hack to convince Alfred that ⌘-spc t e r means "launch Ghostty" (as I have given up on reprogramming my fingers) is as follows:

Create an app alias (in the Finder, right-click on Ghostty.app -> Make Alias) and name it "Ter Ghostty.app". This makes a special macOS alias file, not a symlink. Fire up Alfred Preferences and tell it to also search com.apple.alias-file files. This is buried in Default Results -> Advanced. You can drag and drop the newly created alias file into the list to add its type, or type com.apple.alias-file in carefully.
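As far as I know, Alfred's file results come from the Spotlight metadata index, so asking Spotlight directly is a quick way to confirm the alias really carries that content type. A sketch, assuming the alias was left in /Applications; adjust the path to wherever yours ended up:

```shell
# For a Finder alias this should report kMDItemContentType = "com.apple.alias-file".
mdls -name kMDItemContentType "/Applications/Ter Ghostty.app"

# Check that Spotlight has indexed it at all (Alfred can't find what isn't indexed).
mdfind 'kMDItemContentType == "com.apple.alias-file"' | grep -i ghostty
```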

At that point, Alfred will see the alias and start showing it in default results, and after a few iterations will pick up on the fact that ⌘-spc t e r means Ter Ghostty.app, not iTerm.app or so forth.

This is also useful for any other case where you want to change a default your fingers have memorized (Chrome -> Safari, Vim -> Emacs, Notes -> Obsidian, and maybe someday, Emacs -> Zed).

I've been lucky enough to get into the Ghostty beta, and am very happy with it so far. I ran into one real hiccup, and I know I'll forget how I fixed it when I set up my next laptop, so I'm leaving a note for myself (and anyone else who hits it).

Ghostty uses a custom terminfo entry, xterm-ghostty, and does not install it for the system or the user. Instead, it sets a TERMINFO=/Applications/Ghostty.app/Contents/Resources/terminfo environment variable in Ghostty's own process, which the shells it launches inherit. This mostly works great, but not in cases where something started outside Ghostty cares about the terminal capabilities, such as an Emacs daemon :-(
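To see the difference from a shell, compare the same lookup with and without that variable. A sketch, run from inside Ghostty; `env -u TERMINFO` simply drops the variable to mimic a process, like a launchd-started daemon, that never inherited it:

```shell
# Inside Ghostty, TERMINFO points into the app bundle, so this resolves.
echo "$TERMINFO"
infocmp -x xterm-ghostty > /dev/null && echo "found xterm-ghostty"

# Same lookup without TERMINFO: unless xterm-ghostty is installed somewhere
# ncurses searches by default, infocmp cannot find the entry.
env -u TERMINFO infocmp -x xterm-ghostty > /dev/null || echo "not found without TERMINFO"
```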

When I started, I was using an Emacs daemon controlled by Homebrew services, as I more or less forget everything about launchd shortly after doing anything with it. Homebrew is clever and replaces the launchd plist every time you start the service, so you cannot edit it. Boo. I get it, it keeps people from breaking things, but boo. So, Step 1: stop using Homebrew services as my interface to launchd for starting the Emacs daemon.

Step 2: add my own launchd plist thingie at ~/Library/LaunchAgents/ghostty.emacs.plist. It is derived from the one Homebrew uses, which is derived from the venerable mxcl version:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "http://www.apple.com/DTDs/PropertyList-1.0.dtd">
<plist version="1.0">
<dict>
  <key>EnvironmentVariables</key>
  <dict>
    <key>TERMINFO</key>
    <string>/Applications/Ghostty.app/Contents/Resources/terminfo/</string>
  </dict>
  <key>KeepAlive</key>
  <true/>
  <key>Label</key>
  <string>ghostty.emacs</string>
  <key>LimitLoadToSessionType</key>
  <array>
    <string>Aqua</string>
    <string>Background</string>
    <string>LoginWindow</string>
    <string>StandardIO</string>
    <string>System</string>
  </array>
  <key>ProgramArguments</key>
  <array>
    <string>/opt/homebrew/opt/emacs/bin/emacs</string>
    <string>--fg-daemon</string>
  </array>
  <key>RunAtLoad</key>
  <true/>
</dict>
</plist>
```

I changed the label and added the EnvironmentVariables section setting TERMINFO. I stopped the brew service for emacs, started this one with launchctl load -w ~/Library/LaunchAgents/ghostty.emacs.plist, and voilà, emacsclient works again :-)
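Spelled out as commands, the switch-over looks roughly like this. It's a sketch: it assumes the Homebrew formula is named `emacs` (matching the `ProgramArguments` path in the plist) and uses the older `launchctl load`/`list` subcommands, which still work even though `bootstrap`/`print` are the newer interface.

```shell
# Stop Homebrew's copy of the service so only one Emacs daemon is running.
brew services stop emacs

# Sanity-check the hand-written plist before handing it to launchd.
plutil -lint ~/Library/LaunchAgents/ghostty.emacs.plist

# Load (and enable) the agent, then confirm launchd knows about it.
launchctl load -w ~/Library/LaunchAgents/ghostty.emacs.plist
launchctl list | grep ghostty.emacs

# From a Ghostty window, a terminal frame should now come up cleanly.
emacsclient -t
```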

This feels like a somewhat hacky fix, and I think the better way is probably to install the terminfo file for the local user, the way kitty does, but I want to grok why Mitchell is not doing that before I muck with it!
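For comparison, the per-user install mentioned above could look something like this. A sketch only, run from inside Ghostty so that `TERMINFO` points at the bundled entry: it dumps xterm-ghostty with `infocmp -x` and compiles it into `~/.terminfo`, which ncurses searches even when `TERMINFO` is unset.

```shell
# Dump the xterm-ghostty entry (including extended capabilities) to a temp file.
src=$(mktemp)
infocmp -x xterm-ghostty > "$src"

# Compile it into the per-user database. The -o matters here: with TERMINFO set,
# tic would otherwise try to write into the Ghostty app bundle.
mkdir -p ~/.terminfo
tic -x -o ~/.terminfo "$src"
rm -f "$src"
```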